By Jason Shepherd, Atym CEO

Atym is pleased to announce that we have joined the Margo community on the heels of contributing our device runtime to seed the open source Ocre project in LF Edge! Other member companies include ABB, Capgemini, Cosmonic, Dianomic, Intel, Microsoft, Rockwell Automation, Schneider Electric, Siemens, SUSE, and ZEDEDA.

It’s attractive for developers and admins to have a consistent experience for secure device and application orchestration spanning the edge continuum. However, technical and logistical tradeoffs necessitate coordination between inherently different underlying tools. The Margo initiative is focused on facilitating interoperability between these different tools, enabling the concept of an “orchestrator of orchestrators”.

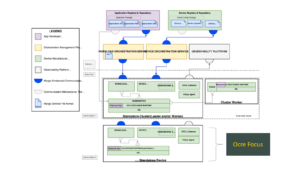

We look forward to exploring with the community how the Ocre runtime can extend the Margo architecture and related APIs to support resource-constrained edge devices such as sensors and controllers that are ubiquitous in the industrial space.

Read on for more detail on the Ocre project, a breakdown of the four key edge Management and Orchestration (MANO) paradigms from the LF Edge taxonomy, and where Ocre fits in.

Project Ocre: Enabling app containers on tiny devices

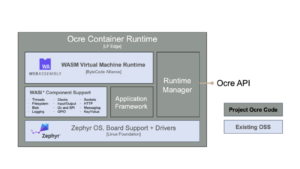

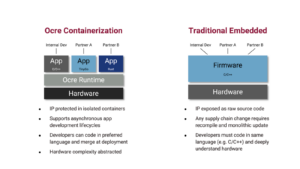

Leveraging WebAssembly and the Zephyr RTOS, Ocre supports OCI-like application containers in a footprint up to 2000x lighter than Linux-based container runtimes such as Docker and Podman. The project’s mission is to revolutionize how applications are developed, deployed, and managed for the billions of constrained edge devices in the field.

Ocre Device Runtime Architecture

Ocre enables developers to “lift and shift” traditional embedded C/C++ code into containers and deploy additional containerized apps alongside them, written in the programming language of their choice (e.g., Go, Rust). This helps organizations reuse code, avoid silicon lock-in, strengthen their security posture, protect their IP, and manage large fleets of devices and apps in the field.

Developing applications for constrained edge devices with Ocre should be as easy as building cloud-native applications. Applicability spans devices and systems such as sensors, cameras, controllers, IoT gateways, machines, robots, drones, autonomous vehicles and beyond.

Ocre Containerization vs. Traditional Embedded

In a related effort, our CTO Stephen Berard is now co-chairing the Embedded and Industrial Special Interest Group (SIG) within the WASI CG, which is part of the Bytecode Alliance. The SIG includes collaborators from Siemens, Bosch, Midokura, Intel and others, and will define standards and recommendations for utilizing the WebAssembly System Interface (WASI) on resource-constrained devices. In turn, the Ocre community intends to codify the group’s output.

Open standards drive scale and trust

I have spent the last ten years of my career in IoT and Edge shaping both commercial and open source technologies and ecosystems. I’m a big believer in the importance of open source collaboration as an enabler of trusted digital infrastructure. Trusted infrastructure delivers more trusted data, which in turn enables new business models and customer experiences.

Trusted infrastructure also helps foster safety in critical environments, preserve our privacy, and protect us from a growing amount of fake data automated by AI. Further, open source collaboration helps technology providers avoid “undifferentiated heavy lifting” so they can focus their efforts on their domain knowledge and necessarily unique hardware and software.

While technologies like AI and digital twins are fundamentally changing the world we live in, tools for the management and orchestration of hardware and applications are essential for making solutions real in the physical world. With the rise of the edge, we’ve been seeing data center technologies like virtual machines, Docker and Kubernetes being extended into the field, but these solutions are not panaceas.

Fit in the Edge Continuum

As the LF Edge community outlined in their 2020 and 2022 taxonomy white papers, the edge is a continuum and infrastructure solutions need to address technical and logistical tradeoffs based on locality. The 2022 paper outlines four key Edge Management and Orchestration (MANO) paradigms:

- Data Center Edge Cloud: Regional, Metro and On-prem Data Centers with a well-defined security perimeter (both physical and network) and a highly-reliable connection between the orchestrator/controller and compute hardware.

- Distributed Edge Cloud: Edge nodes deployed in the field but still capable of supporting technologies like Linux, virtual machines, Docker and Kubernetes. A key difference from the Data Center Edge Cloud is that developers and admins must assume that someone can walk up and tamper with hardware deployed in environments such as the factory floor or out in an oil field. Distributed edge nodes also need to be able to run autonomously if they lose connection to their central controller (based on network availability, or by design in air-gapped scenarios).

- End User Device Edge: Represented by UI-centric PCs and mobile devices that have well established ecosystems with the likes of Windows, Android and iOS.

- Constrained Device Edge: Similar to the Distributed Edge Cloud, but with the added challenge of not having the resources to run traditional data center technologies like Linux, Docker and Kubernetes. These devices are powered by Microcontrollers (MCUs) and lightweight CPUs.

LF Edge MANO Taxonomy

In addition to accommodating periodic loss of connectivity, software updates for distributed edge assets must work in reverse of how they’re typically done in the data center. While server infrastructure in the data center typically sits on a trusted network with a management controller that pushes updates as needed, distributed edge nodes are often deployed on untrusted networks and behind network firewalls and proxies. Therefore, they need to be able to call their central controller and pull updates whenever they have a connection.
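
The pull model described above can be sketched in a few lines of Python. This is a minimal illustration, not the API of Ocre, Margo, or any LF Edge project: `EdgeNode`, `check_in`, and `fetch_latest` are hypothetical names, and `fetch_latest` stands in for whatever outbound call a real node would make to its controller.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EdgeNode:
    """Hypothetical edge node that only makes outbound calls (no inbound pushes)."""
    installed_version: int

    def check_in(self, fetch_latest: Callable[[], int]) -> str:
        """Try one outbound check-in and pull a newer version if one exists.

        fetch_latest is an assumed stand-in for an outbound request to the
        central controller; it raises ConnectionError when the link is down.
        """
        try:
            latest = fetch_latest()
        except ConnectionError:
            # No connection: keep running the current version autonomously.
            return "offline"
        if latest > self.installed_version:
            # A real node would download, verify, and apply the update here.
            self.installed_version = latest
            return "updated"
        return "current"
```

Because the node initiates every exchange, it can sit behind firewalls and proxies, and a failed check-in simply leaves it running what it already has.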

In general, the further you move down the edge continuum from the cloud, the more diverse both hardware and software become. Computing infrastructure is fairly homogeneous in the cloud, but as you approach the physical world, hardware complexity increases faster than software complexity. Hardware variations include specific form factors, I/O and connectivity, design considerations such as ruggedization, and industry-specific certifications.

Meanwhile, with advancements in software technology, it’s possible to extend cloud principles further than ever before – including to more bespoke hardware in the field. In recent years, we’ve seen LF Edge projects like Project EVE and Open Horizon extend VMs, containerization and Kubernetes to lightweight Linux-based devices. Ocre is now uniquely extending cloud-native principles and app containerization past what we at Atym call the “Linux barrier” to MCU-based devices that have as little as 1MB of memory.

Ocre Fit in Edge MANO Continuum

The Ocre runtime is also a great alternative to Docker for lightweight, Linux-capable hardware like IoT gateways and networking gear because it frees up 256-512MB of memory for applications. As such, the sweet spot for Ocre is MCU and constrained CPU-based edge devices that have between 1MB and 1GB of memory.
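
As a rough illustration of that range, the check below encodes the 1MB to 1GB sweet spot. It is a sketch only: the function name is hypothetical, and treating MB and GB as binary (1024-based) units is an assumption the article does not state.

```python
MIB = 1024 * 1024  # assumption: binary units; the article does not specify

SWEET_SPOT_MIN_BYTES = 1 * MIB     # lower bound: 1MB
SWEET_SPOT_MAX_BYTES = 1024 * MIB  # upper bound: 1GB


def in_sweet_spot(memory_bytes: int) -> bool:
    """Return True if a device's memory falls within the stated 1MB-1GB range."""
    return SWEET_SPOT_MIN_BYTES <= memory_bytes <= SWEET_SPOT_MAX_BYTES
```

For example, a gateway with 512MB of memory falls inside the range, while a 256KB microcontroller or a 4GB server falls outside it.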

Ocre as an Alternative to Docker for Freeing Up Device Memory

One goal, different concerns

There are also different logistical considerations spanning the continuum. The person that manages edge infrastructure isn’t necessarily the same person that develops and manages apps. And these tasks could be the responsibility of resources both internal and external to your organization, including third-party service providers. Or, the solution could be provided by an OEM that needs to remotely manage their products but also provides access to customers for running their own apps.

The concerns among OT and IT professionals also vary. While OT typically cares most about uptime, quality, and safety, IT is often most concerned about protecting data. The ramifications of a security breach also tend to play out in very different ways. OT attacks tend to have immediate impact on production and potentially safety, whereas IT security breaches play out over long periods of time (for example, in a credit card data leak).

And while it’s typically just a minor annoyance if IT pushes an update that briefly takes down a server or your PC, it’s highly important for updates of critical infrastructure to be coordinated to ensure production uptime and safety. Imagine a production supervisor getting a pop-up alert to the effect of “please save your work, your manufacturing line will reboot in 15 minutes for a system update”!

The “orchestrator of orchestrators”

So, we want developing and deploying apps at the edge to feel like the cloud in terms of platform independence, code portability, continuous software delivery, tight security, and high availability.

For this to happen – and eventually get to a “single pane of glass” – orchestration tools need to be optimized for each of the different edge MANO paradigms, while being coordinated for overall interoperability across the continuum and addressing the specific needs of both OT and IT.

A key enabling concept for this is an “orchestrator of orchestrators” that links together the different tool sets. Here’s where Margo comes in.

Margo is serving a critical need in defining edge orchestration APIs and semantics for both applications and hardware management to foster interoperability across the edge management paradigms. It’s not about eliminating the ability of technology providers to offer differentiated, commercial solutions. Rather, it’s about enabling vendors to provide their own proprietary solutions that plug into the architecture, provided they adhere to the boundary conditions established by the project. This in turn facilitates efficiency, scalability, security, uptime and safety.

Ocre Focus in Margo Architecture

The end goal is to enable developers to build applications that can be securely deployed in as many places along the cloud-to-edge continuum as possible. Where these portable apps are deployed is then based on a balance of factors such as performance, cost, uptime, safety, and security.

In Conclusion

Wherever you focus in the edge stack, I encourage you to take a look at Margo and join in to help shape the project to meet the needs of OT and IT professionals alike. If you’re interested in learning more about Ocre, dive in through the project website, or drop us a line at Atym where we commercialize the runtime as part of our device edge orchestration solution. Stay tuned for more on our collaboration with the Margo community!